To run the WordCount program on the Hadoop framework, we need the following software.

First, download the following setup files:

1. cloudera-quickstart-vm-5.13.0-0-vmware (mandatory)

2. putty-64bit-0.83-installer (optional). PuTTY: an SSH client used to connect to the Hadoop cluster.

3. Filezilla_3.6.0.6_win32-setup (optional)

FileZilla: transfers files over the network between your local machine and the Hadoop machine, in either direction.

Once you have the cloudera-quickstart-vm-5.13.0-0-vmware setup:

1. Go to the cloudera-quickstart-vm-5.13.0-0-vmware folder and look for the following file.

2. Double-click cloudera-quickstart-vm-5.13.0-0-vmware (about 3 KB in size, of type VMware virtual machine configuration).

3. Your Cloudera virtual machine starts up with the CentOS 6.7 operating system.

4. Now open a Terminal and run a few basic commands as follows.

5. Type the command: [cloudera@quickstart ~]$ jps

This command lists the Java processes running for that particular user.

6. Here you will see only one or two processes, each with its process ID and process name, because the cloudera user is not a superuser.

7. Now check again as the superuser. To switch to the superuser, type:

[cloudera@quickstart ~]$ sudo su

8. You will get a root prompt, and running jps again lists the processes.

9. Notice that the user has changed: you are now the root user, and root has all privileges.

10. This time you will see all the services, each with its process ID and process name.

Hadoop Architecture Overview

Hadoop is composed of two main components:

- HDFS (Hadoop Distributed File System): storage layer
- MapReduce: processing layer

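HDFS Architecture (Storage Layer)

Master Node:

- NameNode: Manages the file system metadata (file names, block locations, permissions)
- Secondary NameNode: Not a backup, but a helper that periodically fetches the metadata image (fsimage) and the edit log from the NameNode, merges them into a new checkpoint, and sends it back, which keeps the edit log small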

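Slave Nodes:

- DataNode: Stores the actual data blocks and regularly reports to the NameNode

MapReduce Architecture (Processing Layer)

Master Node:

- JobTracker: Accepts MapReduce jobs from clients, divides them into map and reduce tasks, and schedules those tasks on the slave nodes

Slave Nodes:

- TaskTracker: Runs the map and reduce tasks assigned by the JobTracker and reports its status back to it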

Note: In newer versions of Hadoop (Hadoop 2.x+), YARN replaces JobTracker and TaskTracker with ResourceManager and NodeManager, respectively. In this overview I stick to the Hadoop 1.x JobTracker/TaskTracker terminology.

Reducer in MapReduce

- A Reducer takes the output from the Mapper, aggregates the values for each key, and writes the final output to HDFS.
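Between the two phases, Hadoop's shuffle-and-sort step groups every Mapper output pair by key, so each call to the Reducer receives one word together with all of its 1s. The sketch below imitates that grouping in memory with plain Java collections. It is not Hadoop API code; the class ShuffleSketch and its method names are hypothetical, chosen only for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory stand-in for Hadoop's shuffle-and-sort phase.
// NOT Hadoop API code: it only illustrates how mapper output is grouped.
class ShuffleSketch {

    // Groups (key, value) pairs by key. A TreeMap keeps the keys sorted,
    // similar to the order in which a single reducer receives its keys.
    static Map<String, List<Integer>> groupByKey(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    public static void main(String[] args) {
        // Mapper output for the line "to be or not to be": one (word, 1) per word.
        List<Map.Entry<String, Integer>> mapperOutput = List.of(
                Map.entry("to", 1), Map.entry("be", 1), Map.entry("or", 1),
                Map.entry("not", 1), Map.entry("to", 1), Map.entry("be", 1));

        // Prints {be=[1, 1], not=[1], or=[1], to=[1, 1]}
        System.out.println(groupByKey(mapperOutput));
    }
}
```

Real Hadoop performs this grouping across machines and spills to disk when memory runs out; the sketch ignores all of that and only shows the shape of the data the Reducer sees.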

Figure: Hadoop Internal Working

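11. ResourceManager + NodeManager (responsible for MapReduce processing; the ResourceManager is the master)

NameNode + DataNode + SecondaryNameNode (responsible for HDFS storage; the NameNode is the master and the DataNodes are the slaves)

Each NodeManager reports to the ResourceManager. In this example, one ResourceManager manages 4 NodeManagers (every machine runs its own NodeManager).

The ResourceManager is the master and the NodeManagers are the slaves: the IP address of the ResourceManager's machine is configured on each NodeManager machine so that the NodeManager knows where to report.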

NameNode Page

Hadoop runs on a cluster of machines. If there are several clusters, each one needs its own ID so that its components can be identified. For example, machines 1-4 could form a cluster with cluster ID 11 and machines 5-8 a cluster with cluster ID 12. The NameNode provides the cluster ID; in this example it is 12.

Now let's run some basic commands in CentOS 6.7 using the terminal.

13. How to create, remove, view, and list files and folders using commands:

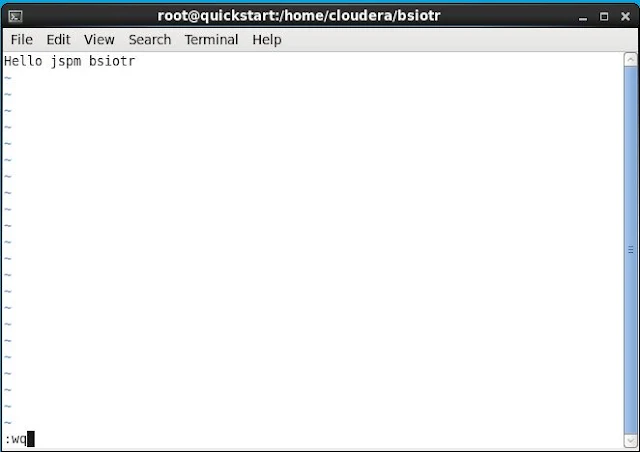

14. The vi command opens an existing file, or, if the file does not exist, creates it and opens it in the vi editor.

15. To type text into the file, first switch to insert mode by pressing i.

16. Then type some text, for example: hello jspms bsiotr

17. To save the file and exit, press Esc first and then type :wq

18. You can also create a file with the touch command and remove it with the rm command.

19. How to move one folder into another folder from the terminal (using the mv command):

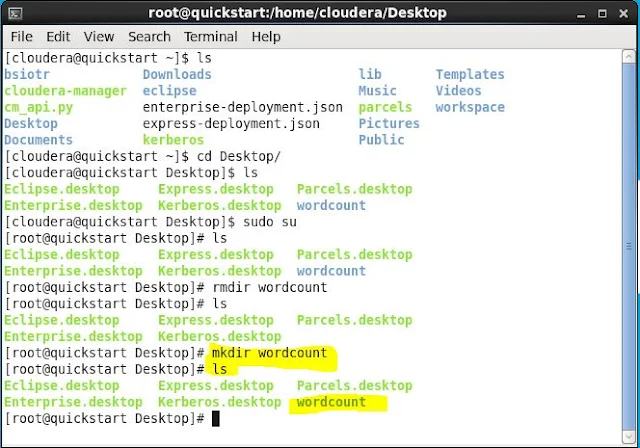

20. Now let's move on to the WordCount assignment.

We need three Java program files inside a wordcount folder on the local file system (the source files are compiled locally, not in HDFS).

So first create the folder with $ mkdir wordcount and then save the code of the following files in it:

1. WordCount.java

2. WordMapper.java

3. SumReducer.java

21. Let's explain the logic of each file in detail, starting with WordCount.java.

a. When we run the program, we pass two arguments on the command line (the input path and the output path). If args.length is not equal to 2, the driver returns -1 and the job is not run; we then have to rerun the command with the correct arguments.

b. Create a new Job and call setJarByClass and setJobName.

c. Set the input and output paths.

d. Set the Mapper and Reducer classes.

e. Set the map output key class and map output value class.

f. Set the final output key and value classes, since the final output is written as key-value pairs.

From the above steps we can see that WordCount.java acts as the driver (configuration) class.
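Step a is the only part of the driver that can be shown without Hadoop on the classpath. The following plain-Java sketch mirrors that argument check, assuming the two arguments are the input and output paths passed to hadoop jar. The class name DriverArgsSketch and the usage message are hypothetical, and steps b to f are only described in a comment rather than implemented.

```java
// Hypothetical sketch of the driver's argument check (step a).
// It does not use Hadoop; the job configuration (steps b-f) is only described.
class DriverArgsSketch {

    // Returns -1 (after printing a usage hint) unless exactly two arguments are given.
    static int run(String[] args) {
        if (args.length != 2) {
            System.err.println("Usage: WordCount <input dir> <output dir>");
            return -1;
        }
        String inputPath = args[0];
        String outputPath = args[1];
        // In the real driver, steps b-f happen here: create the Job, set the jar
        // and job name, the input/output paths, the Mapper and Reducer classes,
        // and the map and final output key/value classes, then submit the job.
        System.out.println("input=" + inputPath + ", output=" + outputPath);
        return 0;
    }

    public static void main(String[] args) {
        System.exit(run(args));
    }
}
```

Calling run with the two paths /wordcount/ and /wc_opMarch returns 0, while calling it with a single argument prints the usage line and returns -1.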

22. The code of WordMapper.java is as follows.

Explanation:

In the code above, at line number 12 we store the input line in String s. We then split that string into words with split("\\W+"), which breaks it on runs of non-word characters, and loop over the resulting words. For each word we check its length; if it is greater than 0, we emit the mapper output as (word, 1).
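The mapper logic described above can be tried out without a cluster. Below is a plain-Java sketch of just that logic; it collects pairs in a List instead of writing to Hadoop's Context, uses String and Integer instead of Text and IntWritable, and the class name MapperLogicSketch is hypothetical.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical plain-Java version of the mapper logic (no Hadoop classes).
class MapperLogicSketch {

    // Splits one input line on runs of non-word characters and
    // emits a (word, 1) pair for every non-empty token.
    static List<Map.Entry<String, Integer>> map(String s) {
        List<Map.Entry<String, Integer>> output = new ArrayList<>();
        for (String word : s.split("\\W+")) {
            // A line that starts with a space or punctuation yields an empty
            // first token, which is why the length check is needed.
            if (word.length() > 0) {
                output.add(new SimpleEntry<>(word, 1));
            }
        }
        return output;
    }

    public static void main(String[] args) {
        // Prints [Hello=1, world=1, hello=1]
        System.out.println(map("  Hello, world! hello"));
    }
}
```

The input in main starts with spaces, so split("\\W+") returns an empty first token; the length check is what keeps it out of the output. Note also that \\W treats the apostrophe as a separator, so "don't" is counted as "don" and "t".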

23. The code of SumReducer.java is as follows.

Explanation:

In the code above, at line number 12 we initialize wordCount = 0. We then loop over all the values for the current key and add each one to wordCount. Finally, we write the key and its total count as the job output, which is stored in HDFS.
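Likewise, here is a plain-Java sketch of the reducer's summing loop, with Integer values standing in for Hadoop's IntWritable. ReducerLogicSketch is a hypothetical name, and the word parameter is there only to mirror the Reducer's (key, values) shape.

```java
import java.util.List;

// Hypothetical plain-Java version of the reducer logic (no Hadoop classes).
class ReducerLogicSketch {

    // Sums all the counts that the shuffle phase grouped under one word.
    // The word itself is not needed for the sum; it mirrors reduce(key, values).
    static int reduce(String word, Iterable<Integer> values) {
        int wordCount = 0;
        for (int value : values) {
            wordCount += value;
        }
        return wordCount;
    }

    public static void main(String[] args) {
        // "to" appeared twice in the mapper output, so it arrives as [1, 1].
        // Prints "to", a tab, and 2
        System.out.println("to\t" + reduce("to", List.of(1, 1)));
    }
}
```

The values are taken as an Iterable and summed in a single pass, mirroring the Reducer, whose values for a key can be iterated only once.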

How to execute the above code?

Step 1: Compile all the Java files in the folder. The Hadoop libraries have to be on the classpath at compile time, so we pass them with -cp. *.java means all Java files in the current folder.

The compilation command is:

[root@quickstart WordCount]# javac -cp /usr/lib/hadoop/*:/usr/lib/hadoop-mapreduce/*:/usr/lib/hadoop-0.20-mapreduce/* *.java

After the compilation finishes, listing the folder shows that three .class files have been created, one for each source file (WordCount.class, WordMapper.class and SumReducer.class).

Step 2: Now package all the .class files into a single archive, called a JAR file.

The command to create the JAR is:

[root@quickstart WordCount]# jar -cvf wc.jar *.class

Step 3: Now provide the input file whose words we want to count. For this we have created a folder named wordcount on HDFS (for example with hdfs dfs -mkdir /wordcount). To put the input file UN.txt into HDFS, we use the command:

hdfs dfs -put UN.txt /wordcount

This uploads a local file named UN.txt into HDFS (Hadoop Distributed File System).

Explanation of the command:

hdfs dfs: This is the command-line interface to interact with HDFS.

-put: This tells Hadoop to copy a file from the local filesystem to HDFS.

UN.txt: This is the local file you want to upload.

/wordcount: This is the destination directory in HDFS where you're placing the file.

Step 4: To run the MapReduce job on Hadoop, use the following command:

hadoop jar wc.jar WordCount /wordcount/ /wc_opMarch

“Run the WordCount class from wc.jar, process the files in /wordcount/, and save the output in /wc_opMarch.”

Explanation:

hadoop jar: This tells Hadoop to run a JAR file that contains a MapReduce program.

wc.jar: This is the name of the JAR file containing your MapReduce code (here, the WordCount program).

WordCount: This is the main class inside the JAR file, which contains the main() method to be executed.

/wordcount/: This is the input path in HDFS. It should contain the file(s) to be processed, in this case the UN.txt file uploaded in Step 3.

/wc_opMarch: This is the output directory in HDFS where the results of the MapReduce job (word counts) will be stored. This directory must not exist before the job runs; if it does, Hadoop refuses to start the job.

Step 5: Now check the output, that is, the word counts, for example with:

hdfs dfs -cat /wc_opMarch/part-*
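The output files are plain text. Hadoop's default TextOutputFormat writes each key-value pair as one line: the key, a tab character, and the value. Assuming a single reducer (the default unless the job configures more), the words also appear in sorted order, because the reducer receives its keys sorted. The plain-Java sketch below (hypothetical class name OutputFormatSketch, no Hadoop classes) formats a few counts the same way, to show what to expect when you open the output.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of what the job's text output looks like:
// one "word<TAB>count" line per word, sorted by word.
class OutputFormatSketch {

    static String format(Map<String, Integer> counts) {
        StringBuilder out = new StringBuilder();
        // Copying into a TreeMap sorts the words; for the ASCII-only tokens
        // produced by split("\\W+") this matches Hadoop's byte-wise key order.
        for (Map.Entry<String, Integer> e : new TreeMap<>(counts).entrySet()) {
            out.append(e.getKey()).append('\t').append(e.getValue()).append('\n');
        }
        return out.toString();
    }

    public static void main(String[] args) {
        // Prints three lines: To 1, be 2, to 2 (each word and count separated by a tab)
        System.out.print(format(Map.of("to", 2, "be", 2, "To", 1)));
    }
}
```

Because the comparison is case-sensitive, "To" and "to" are counted as separate words, and uppercase words sort before lowercase ones.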

Note: If you have reached the end, you have succeeded. If you found this material worthwhile and learned something from this blog, please leave your feedback in the comments.
